In this short analysis P. Atkinson highlights the uncertainties associated with the field of evidence-informed policy making, especially in crisis situations such as Covid-19.

The Covid-19 pandemic experiences of many different countries have shown how an exceptional need for research evidence led to the rapid deployment of scientists in new advisory roles to help policy makers reach evidence-informed decisions. (1) This has helped us learn how science advisers experience, and try to manage, the challenges of insufficient, evolving, and conflicting evidence as they work to inform public health decision-making. A fast-moving crisis like Covid-19 obviously poses some very specific challenges to evidence-informed policy making, but I try here to draw out more general messages as well.

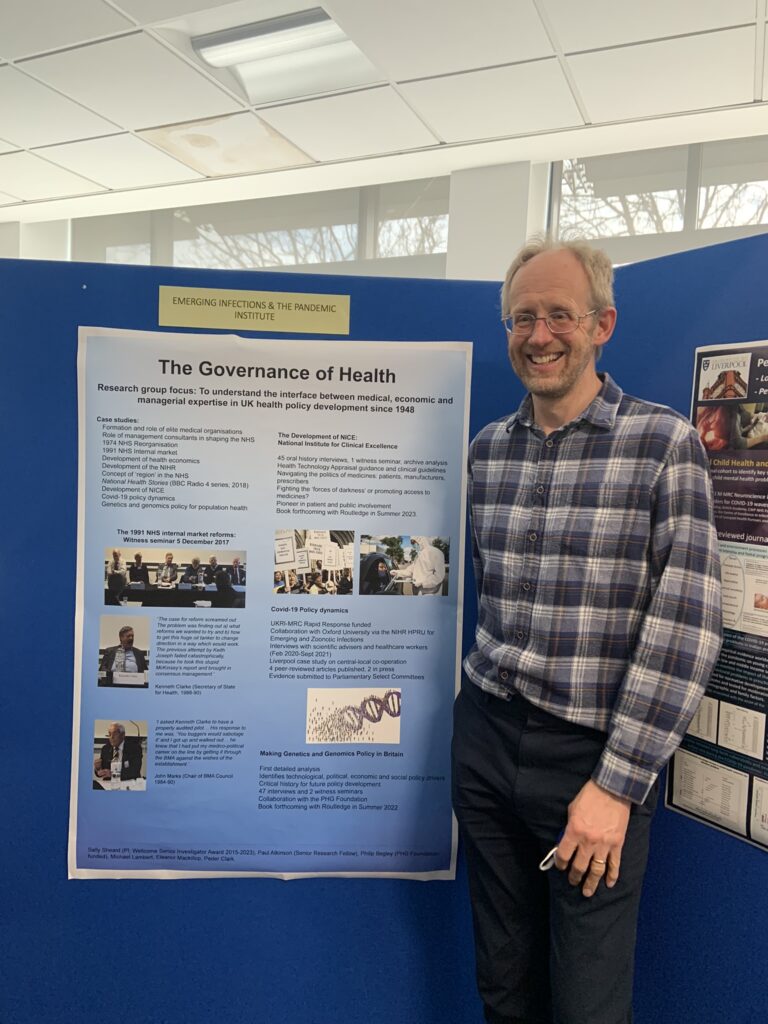

My own work in the UK focussed on capturing scientific advisers’ experiences in real time by means of repeated short telephone interviews. (2) We found that the rhetoric of ‘following the science’ or ‘evidence-based policy making’ pays insufficient attention to what these phrases mean or how they are operationalised. The UK case helps us think through what it means to use evidence for policy, and yields a series of generalisable messages that sum up the key qualifications and difficulties. First, we found that many scientists sought guidance from policy makers about their goals: they wanted to focus their work where it would be used. Yet crises like Covid-19 show that policy makers may fail to give a clear lead and tend to change course quickly. Second, we showed that many scientists did not want to offer policy advice, which suggests that (for them) policy could never simply be ‘evidence based’. What they wished to provide instead was evidence to inform values-based policy choices. Finally, we noted the range of knowledge that informed the UK’s pandemic response, examined which kinds of knowledge were privileged, and showed that it was unclear how government synthesised the different forms of evidence being used.

Those findings led to the conclusion that it is easy – and wrong – to advocate evidence-based policy making. Most of those who are closest to the policy process are deeply sceptical about this notion, or at least demand clarity about what the words mean. If ‘evidence’ is confined to the products of scientific research, or the work of ‘experts’ more generally, and ‘based’ means that policy is supposed to be constrained by these, this amounts to a dangerous technocratic attack (whether intended or not) on democratic accountability. I have argued elsewhere that we misunderstand the nature of science if we think it even can – let alone should – show us what to do. Science is a method for understanding the world rather than one for making decisions: for uncovering ‘what is’ rather than deciding ‘what ought’. Science cannot, for example, weigh the economic and social harms of a stricter lockdown against its infection control benefits: the answer to that problem lies in our values, not in knowledge.

Many of the more critical thinkers about science and policy have therefore moved on from ‘evidence-based policy making’ to ‘evidence-informed policy making’. This is definitely an improvement: in words attributed to Winston Churchill (though he was almost certainly not the first to use them), science should be ‘on tap, not on top’. Paul Cairney’s impressive analysis of what science advisers actually do, rather than what we think they do (or should do), shows that their inputs range along a continuum from ‘minimal’ guidance, helping resolve uncertainties of fact, to ‘maximal’ guidance, helping reduce ambiguity about how to define and solve the policy problem. (3)

Most science advisers with whom I have spoken about Covid-19 believe in the utility of evidence-informed policy making. But even evidence-informed policy making has its challenges. One big question is what qualifies as evidence. And what evidence is best? In evidence-based medicine, the received wisdom is that the ‘hierarchy of evidence’ places the results of randomised controlled trials at the top, not without reason, given the deep scholarly attention paid to trial design since the 1950s to keep results from being contaminated by confounding factors. (4) Some biomedical scientists would like health policy makers to give greatest attention to trial results too. Certainly when it comes to decisions about Covid-19 treatments, most of us would prefer medicines validated by trials to the medicines advocated by Donald Trump on evidence whose nature was at best unclear.

But most health policies are about more complex questions, with a bigger social dimension, than the selection of medicines. One cannot conduct a randomised controlled trial of most types of health policy intervention. In the Covid-19 response, policy makers had to weigh the infection control benefits of non-pharmaceutical interventions such as stay-at-home mandates (‘lockdowns’) against the socio-economic harms they simultaneously caused, from lost incomes and education to the impacts on mental health. Most countries now appear to regret some of their choices, but to do better with the information available, we would need more sophisticated approaches to selecting and weighting our evidence.

Scientists tend – naturally enough – to feel frustrated at not seeing the impact of their advice. Our enthusiasm for the use of evidence extends to wanting evidence about how policy makers used the evidence we gave them. They are likely to disappoint us. (5) An expert adviser in the USA spoke for many:

“I observed in a lot of interactions with government decision-makers, […] that they ask what you think, and then they do something and they never tell you how, if at all, what you told them influenced or didn’t influence their decision or what else influenced them. They don’t even tell you the decisions. They say, ‘Thank you very much’, and you’re done, and you have to reconstruct it yourself.”

Vickery et al., ‘Challenges to Evidence-Informed Decision-Making in the Context of Pandemics – The Case of COVID-19: Qualitative Study of Policy Advisor Perspectives’, BMJ Global Health (forthcoming).

As a former civil service policy analyst I can see both sides of the argument. Transparency about how policy decisions are reached has clear advantages for the quality of public policy (and presumably for trust in politicians), but you can have too much of a good thing. Advisers are likely to speak more candidly to policy makers when assured of confidentiality, for example when asked to ‘think the unthinkable’. A Covid-19 example would be the modelling of worst-case scenarios. The arguments for and against going public early with worrying model results have been well rehearsed, and I come down on the side of caution. (6) And pragmatically, any well-intentioned attempt to force greater transparency on reluctant policy makers generally just pushes them to find a new channel for the discussions they want to keep private. The unsatisfactory implication is that some science advisers will never know whether what they said made a difference. But we can at least build safeguards into the science advice machinery to avoid this where possible.

What, then, to do? There is now plenty of sound advice available to the producers of expert evidence about how to share it with policy makers: it would be superfluous to add my voice to it here. (7) In summary, this advice says that experts need to wise up about policy and politics and pick one of the ‘expert’ roles actually available, rather than waste time attempting the impossible (for example, completing a research project and then driving policy change based on its findings). Understand your audience: policy makers in most countries are not generally perverse, stupid or corrupt. They do base decisions on evidence; our problem is when it is not the kind of evidence we favour. Alongside expert advice, they value the opinions of trusted contacts and pay attention to dominant discourses in the media and public opinion. That’s democracy. None of us, when we think about it, really wants to live in a society controlled by scientists.