Plinio Casarotto takes a look at the future of publishing.

The scientific community has been flooded with well-intended ideas and concrete actions to tackle openness in science, or rather its lack. More specifically, guaranteeing access to otherwise ‘protected’ material has become the obsession of many (mostly early-career) researchers. Recently, services such as Sci-Hub and Unpaywall have tried, legally or not, to break the oligopoly of the big publishers or to find free versions of otherwise ‘protected’ material, which is a relief to many researchers in African, Asian and Latin American countries. The latest ‘no más’ came as a series of refusals by library consortia to renew their subscriptions with the publishing giant Elsevier. The most famous is the still ongoing dispute between German institutions and Elsevier; another notorious case involved Swedish universities and the same publishing ‘Behemoth’. Sweden ‘out-of-the-blue’ cut the cord with Elsevier, and reports so far indicate no major problems for its institutions1. In Finland, the dispute ended with a series of ‘concessions’: open-access publishing in selected Elsevier journals (around 1800) and a ‘discount’ on subscriptions. Frankly, it sounds to me as if Finnish universities got some crumbs from the table and are happy with them. A similar deal was reached with Dutch universities.

Examples like these will keep happening as long as we insist on a model that largely puts private above public interests, and scientific publishing is a textbook example of wealth transfer from public sources to private pockets. Elsevier, a subsidiary of the RELX Group, accounts for 16% of the total scientific publications ‘market’ (yes, it is in quotes because it shouldn’t be treated as one), with 2017 revenue of a little over 2.4 billion dollars and an average profit margin of 37%. The usual justification is that peer review is necessary to guarantee scientific accuracy and the ‘distinction between fact and knowledge’. The argument would sound plausible if the publishers actually did all the work. But the model relies on scientists writing, reviewing and editing the studies, mostly for free. The publishers act as a ‘middleman’, bringing editors, referees and authors onto the same platform. Once approved, manuscripts get a ‘makeup’ and are indexed in search platforms. At this point comes another fallacious argument: the publishers’ costs have to be covered somehow. Plausible in the era of printed copies, this justification no longer holds in an increasingly online system: costs still exist, but they are a fraction of those of printed versions. Today, publishers seem to be selling a solution to a problem they created: we still print journals (which is expensive), so you have to pay (a lot) for them, even if you only use the electronic version.

The proposal is simple: cut out the ‘middleman’, i.e., break the journals. The whole system finds its raison d’être in the ‘need’ for journals to communicate and archive peer-reviewed scientific findings. Move the whole peer-review process to a centralized platform, maintained and administered with public funding (for a fraction of what it costs to pay the publishers to do it), and the entire justification for the publishers’ oligopoly vanishes. Such platforms are already in use and gaining momentum: preprint repositories. For example, maintaining arXiv, one of the biggest servers, costs in the range of 1 million dollars per year2. To put this in perspective, Finland alone paid 35 million euros in 2016 (the most recent complete data available from the Finnish libraries consortium) for journal subscriptions (80% of it to the top five publishers), of which 10 million went to Elsevier3.

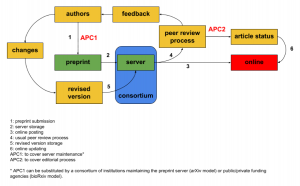

Preprint servers already host the material, already have access to researchers in each field (who, I bet, are willing to keep giving their time to review colleagues’ work), and are already mostly publicly funded (or funded through a mixed model, as in the case of bioRxiv). Once a manuscript is submitted or ‘posted’ to the preprint server, the authors could pay a small fee (as calculated by Jon Tennant, a realistic article-processing fee would be around 100 USD), and the manuscript goes to peer review, provided that all relevant information and data are also available for scrutiny. Alternatively, this step could be free to authors if the server is maintained by an external funding source (as mentioned above, public, private or mixed). If the authors decide to address all the reviewers’ queries and the manuscript is approved, it receives a ‘reviewed’ stamp and gains article ‘status’. At this point a fee could be introduced to cover editorial costs, mainly to fund full-time editors. The authors can appeal the reviewers’ decision or decline to revise, in which case the study stays at preprint level but remains openly accessible (please see the workflow figure).

The ‘shock-absorption’ mechanism would rely on the ability of a newly formed university consortium to move quickly towards such a central platform, bringing along the editorial expertise (i.e., scientists) from the journals and publishers. The integration of EU institutions offers a unique chance of success here, given that many universities and institutes are funded by EU grants. Alternatively, a transitional or buffered period, in which researchers serve in both systems, is a viable option. In this scenario, the manuscript follows the usual flow but, once accepted, is immediately made available in the consortium repository. Small-scale pilot experiments could be run on small preprint platforms (small only in the number of submissions, not in importance), such as paleoRxiv or SciELO. If these succeed, a pan-European or global platform is the next obvious step.

At the risk of sounding too idealistic, the most important consequence of such a ‘radical’ idea would be to return the spin-offs and products of the scientific process to the common good, where they belong.

As I see it, the main obstacles to implementing this proposal are:

1) changing the mindset that journals are a necessary step for peer-reviewed publication, and

2) that publishers play any relevant role in the peer-review process.

Not to mention the predictable dismissal of any proposal that reduces the power of publishers as ‘unrealistic’ or ‘unachievable’; of course, those with the most to lose will complain and whine.

However, publishers would not be ‘left in the snow’: they could maintain and improve the common platform, provided that all the code and servers have publicly available mirrors and hosts. Publishers could also check the text for grammar and typos (especially since a significant share of authors are not native English speakers) and give the manuscripts their final touches (layout, various digital formats, references, figures and art). But the days of blatant exploitation of public resources for shareholders’ profit and copyright retention would be over.

Similar initiatives already exist among private publishers. One good example is the model used by PeerJ and F1000Research, where preprints and peer-reviewed papers are hosted by the same publisher. The bottom line of this proposal is to expand this model to a ‘supra-national’ level, to encompass all fields of science, and to back it with public resources, thus reducing costs. This model would guarantee anyone free and unrestricted access to every step of the scientific process, and providing the means to achieve this is nothing more than our duty as scientists.

Note: I would like to thank librarian Marjo Kuusela for the information on subscription costs in Finland, and Victor Venema, from the University of Bonn, for the exchange of ideas and help in editing the text.